Do you remember playing a game called “telephone” (or “Chinese Whispers”) as a kid?

The game is simple: the first player comes up with a short sentence like “Alice and Bob walked to the bakery”. They then whisper this sentence to the second player, who whispers it to the third player, and so on.

The fun part happens at the end, when the final player tells everyone what they heard. Everyone usually laughs, because the sentence has changed a lot on its way from ear to ear, e.g. “Alice ran after Bob who stole her cake”.¹

What if we made a pair of AI systems play a similar game with each other, but with a small twist: instead of communicating with voices, the systems communicate through text and images?

The game would go like this:

1. I choose a real image and write a one-sentence description of it, just to make sure the first input is “real”.

2. AI 1, a text-to-image generator, would take the description and turn it into an image.

3. AI 2, an image-to-text generator, would take the image from step 2 and write a new description of it.

4. Go back to step 2 and feed this new description to AI 1.
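The game is really just a loop. As a minimal sketch of how it could be automated, here it is in Python. `text_to_image` and `image_to_text` are hypothetical stand-ins for AI 1 and AI 2 (they are not functions from either project), and `start_image` models the real photo, which is only given to AI 1 in the first round.

```python
from typing import Any, Callable, List, Optional, Tuple

Image = Any  # stand-in for whatever image type the generator produces

def play_telephone(
    description: str,
    text_to_image: Callable[[str, Optional[Image]], Image],
    image_to_text: Callable[[Image], str],
    rounds: int = 4,
    start_image: Optional[Image] = None,
) -> List[Tuple[Image, str]]:
    """Alternate between AI 1 and AI 2 and record every (image, description) pair."""
    history: List[Tuple[Image, str]] = []
    init_image = start_image  # the real photo is only used in the first round
    for _ in range(rounds):
        image = text_to_image(description, init_image)  # AI 1: text -> image
        description = image_to_text(image)               # AI 2: image -> text
        history.append((image, description))
        init_image = None  # later rounds start from the text alone
    return history

# Usage with fake (hypothetical) models, just to show the data flow:
def fake_text_to_image(text: str, init_image: Optional[Image]) -> Image:
    return f"[img: {text}]"

def fake_image_to_text(image: Image) -> str:
    return f"something like {image}"

for image, caption in play_telephone(
    "dog on a hill", fake_text_to_image, fake_image_to_text, rounds=2, start_image="dog.jpg"
):
    print(caption)
```

Passing the two models in as plain functions keeps the loop independent of any particular library, so it can be tried with fakes like these before plugging in the real (and much slower) models.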

Without further ado, let us try it out, and we will get to the technology later in the post.

For the first image, I chose this picture with the description “dog standing on a grass hill with a yellow field in the background”:

With both the image and the description as the first inputs to the image generator, I got the following series of images and text descriptions:

The above image was interpreted by AI 2 as “a dog on green grass” (not bad, actually), and that description was fed back to AI 1 to produce:

This image was interpreted by AI 2 as “a bunch of atm food”, which doesn’t make much sense, but who am I to judge? Back it went to AI 1, and we got the following image:

Yeah, that looks like bread, sausages and an… “AMT”? Not quite an ATM, but hey, it’s close. AI 2 interpreted this image as “a display case are sitting on a kitchen table”, and based on that, we got our final image:

I think it tried to draw cameras and a cellphone, but it is a bit abstract. This image was interpreted as “a few on a wall”.

And there you have it. We went from “dog standing on a grass hill with a yellow field in the background” to “a few on a wall”, and from a nice summer image of my dog to what looks like a display of electronic devices.

It is worth noting that the above is just one of many possible outcomes from the same starting point. Both models involve randomness, so running the game again almost never gives the same end result. It might be interesting to automate the process in the future.

For now, I hope you just enjoyed this little experiment.

The tech behind

As mentioned in the introduction, the “game” consists of two deep learning (“AI”) systems. I actually already wrote a post about one of them: the image captioning model from the TensorFlow tutorial. This is what I called “AI 2”, and it can take an image and produce a caption for it.

For this experiment, I let the model train a bit longer than in the previous post, but I did not really evaluate it, so the captions are still hit or miss. However, its first interpretation, “a dog on green grass”, shows that it is not terrible.
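Captioning models of this kind build the caption one word at a time: given the image and the words so far, the model scores every possible next word, and one word is picked until an end token appears. The sketch below is a simplified, hypothetical version of that loop, not the tutorial's code. `next_token_probs` stands in for the trained model (the image features are assumed to be baked into it), and the `greedy` flag switches between always taking the most likely word and sampling from the distribution. Sampling is one way the same image can end up with different captions on different runs.

```python
import random
from typing import Callable, Dict, List, Optional

# Hypothetical model interface: given the words so far, return next-word probabilities.
NextTokenProbs = Callable[[List[str]], Dict[str, float]]

def decode_caption(
    next_token_probs: NextTokenProbs,
    max_len: int = 20,
    greedy: bool = False,
    rng: Optional[random.Random] = None,
) -> str:
    """Build a caption word by word until '<end>' is chosen or max_len words are produced."""
    rng = rng or random.Random()
    tokens = ["<start>"]
    for _ in range(max_len):
        probs = next_token_probs(tokens)
        if greedy:
            word = max(probs, key=probs.get)  # always take the most likely word
        else:
            words = list(probs)
            # Sample a word in proportion to its probability
            word = rng.choices(words, weights=[probs[w] for w in words])[0]
        if word == "<end>":
            break
        tokens.append(word)
    return " ".join(tokens[1:])  # drop the '<start>' marker

# Toy stand-in for a trained captioner: mostly follows SCRIPT, sometimes says "cake".
SCRIPT = ["a", "dog", "on", "green", "grass", "<end>"]

def toy_model(tokens: List[str]) -> Dict[str, float]:
    step = min(len(tokens) - 1, len(SCRIPT) - 1)
    return {SCRIPT[step]: 0.9, "cake": 0.1}

print(decode_caption(toy_model, greedy=True))  # a dog on green grass
print(decode_caption(toy_model, rng=random.Random(1)))
```

With a fixed seed the sampled caption is repeatable; with a fresh `random.Random()` it can differ from run to run.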

The image generator (“AI 1”) is the new and shiny thing here. It is called VQGAN+CLIP, and it actually consists of two models that work together to produce an image from a piece of text. The specific version I am using here is based on work by Katherine Crowson found in this vqgan-clip repository on GitHub (specifically the notebook with the z+quantize method).

For this experiment, I let the system run for a few minutes before stopping it and taking the image it had produced so far. I do not fully understand how the VQGAN+CLIP system works, and it is probably also beyond the scope of this post to discuss it, but I encourage you to search for examples online.
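Very roughly, the general idea behind this family of methods is that CLIP scores how well an image matches the text, and the image generator's latent input is nudged step by step to raise that score. The toy sketch below shows only that idea and is a heavily simplified assumption, not the notebook's method: the “decoder” (standing in for VQGAN) and the “image encoder” (standing in for CLIP) are random linear maps, the text embedding is a random vector, and plain gradient ascent on cosine similarity replaces the real optimizer. It also skips the codebook quantization step that the z+quantize variant applies to the latent.

```python
import numpy as np

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def guide_latent(decoder, image_encoder, text_embedding, z0, steps=1000, lr=0.05):
    """Gradient ascent on cos(image_encoder @ decoder @ z, text_embedding) w.r.t. z."""
    m = image_encoder @ decoder  # toy pipeline is linear: latent -> "image" -> embedding
    t = text_embedding
    nt = np.linalg.norm(t)
    z = z0.copy()
    for _ in range(steps):
        e = m @ z  # embedding of the decoded "image"
        ne = np.linalg.norm(e)
        # Gradient of cos(e, t) with respect to e
        grad_e = t / (ne * nt) - (e @ t) * e / (ne**3 * nt)
        z = z + lr * (m.T @ grad_e)  # chain rule back to the latent z
    return z

rng = np.random.default_rng(0)
decoder = rng.normal(size=(16, 8)) / 4        # toy "VQGAN decoder": latent (8) -> image (16)
image_encoder = rng.normal(size=(4, 16)) / 4  # toy "CLIP image encoder": image (16) -> embedding (4)
text_embedding = rng.normal(size=4)           # toy "CLIP text embedding"
z0 = rng.normal(size=8)

z = guide_latent(decoder, image_encoder, text_embedding, z0)
before = cosine(image_encoder @ decoder @ z0, text_embedding)
after = cosine(image_encoder @ decoder @ z, text_embedding)
print(f"similarity before: {before:.3f}, after: {after:.3f}")
```

More steps generally push the similarity higher, which loosely mirrors letting the real system run for a few minutes before taking the image.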

Its creations are often abstract with a hint of reality, so they end up looking quite surreal and sometimes disturbing. This blog post about “AI movie posters” is what got me interested in VQGAN+CLIP, and I might explore it a bit more in the future as well.

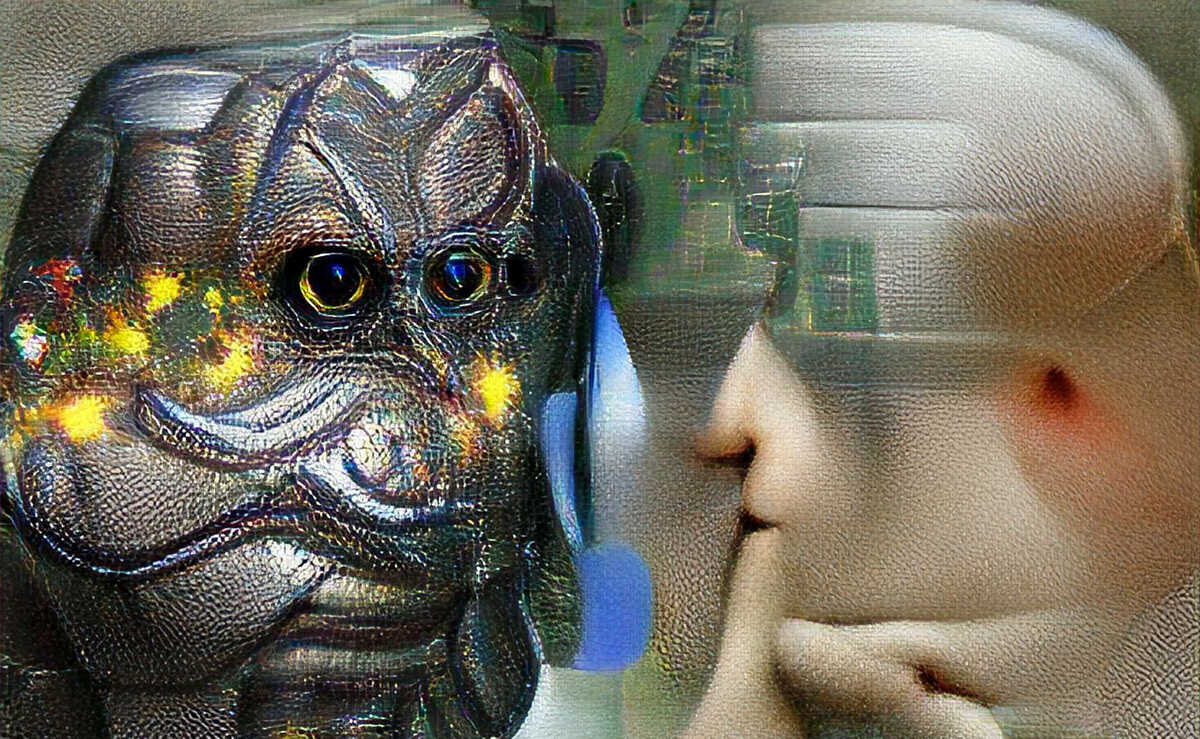

By the way, the image at the beginning of this post was also made by VQGAN+CLIP, from the text “An artificial intelligence whispers to another artificial intelligence”, and upscaled with a super-resolution neural network called ESRGAN. Good stuff!
