I recently launched a new website called AI Tales, where I share small snippets of text, generated by AI, edited by me. AI Tales is going to be my playground for sharing pieces of text that I find “interesting” in one way or another. I will try to update it regularly with new content. We…

Category: Projects

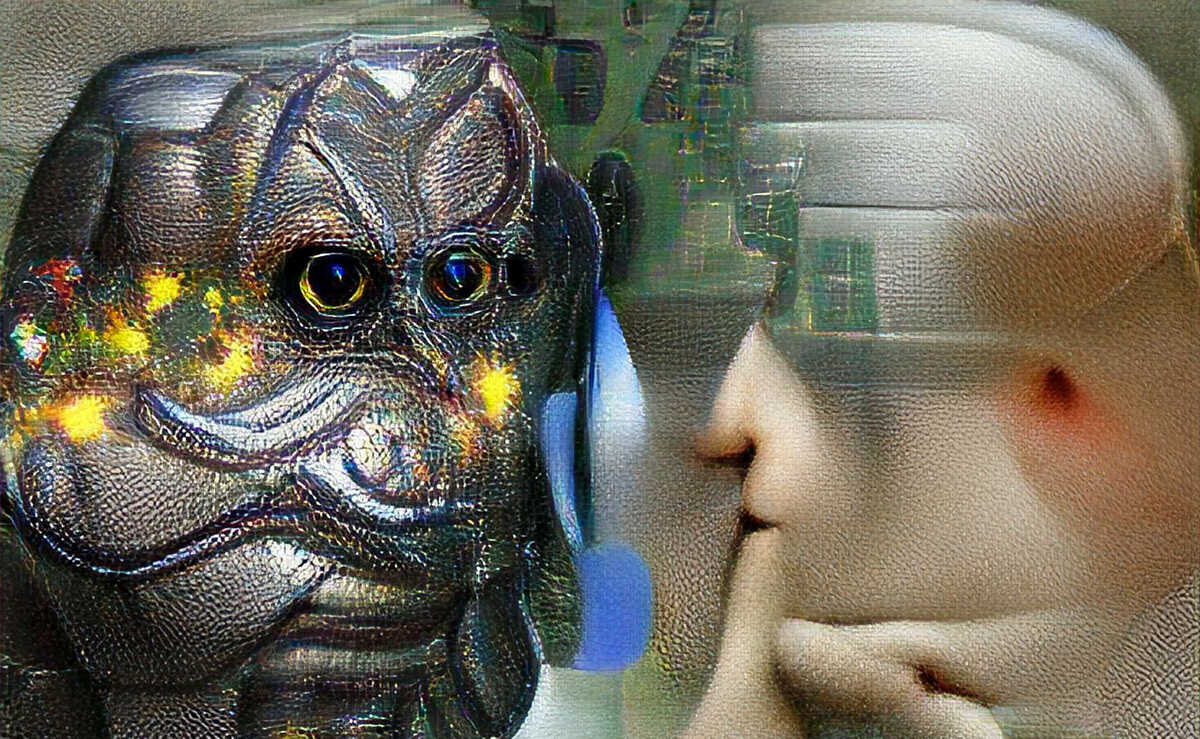

A game of (AI) telephone

Do you remember playing a game called “telephone” (or “Chinese Whispers”) as a kid? The game is simple: the first player comes up with a short sentence like “Alice and Bob walked to the bakery”. They whisper this sentence to the second player, who whispers it to the third player, and so on. The fun part…

Lyrics generator talk

As part of a recent talk I gave about my now-quite-old lyrics generator, I took the opportunity to update the code a bit and share a few of the trained models, including the world-famous Gene Lyrica One.

- Pre-trained lyrics generator models
- Source code for lyrics generator

The new “rock” models have similar performance to…

Image captioning model

Progress is slow on my various hobby efforts to generate images, create lyrics, or even detect my dog in an image, but I still experiment a little when I have the time. Today, I ran through the image captioning tutorial from TensorFlow, because why not. I trained it on a reduced…

Dipping the feet in the game design pond

For my wife’s birthday this year, I created a prototype of a small game-like 3D environment that she could “walk around” in using the keyboard and mouse. The idea was to have an “exhibit” for each year we have known each other, consisting of a few photos from that year as well as a short…